Intro to Machine Learning (UD 120): Online Course Review

Thu Apr 3 2025 (E: 2025 Nov 15)

View 3 comment(s).- Course Quality and Differences from Formal Education

- Final Project: Persons of Interest at Enron

- Time to Try a Paid Course?

I got curious about the ever-present and annoying systems that collect data off me and try to sell me things. I’m the type of person who browses the web with javascript and gpu acceleration disabled. Even before I sprouted a web presence, I had wondered what the algorithms were doing wrong.

I took Intro to Machine Learning (UD 120) on Udacity both for a glimpse into the subject of machine learning and to see how good Udacity is. UD 120 is one of the free courses. Judging from the course material, there used to be a certificate associated with the course but not anymore.

Note: this blog was drafted as I was taking the course before I let anyone (or thing) at Udacity know I had my own blog: before I even filled out the website field in my account profile. So I have no incentive to say anything nice.

The git repository of class projects is available on GitHub.

https://github.com/udacity/ud120-projects

The course covers a substantial amount of topics about supervised machine learning:

- Naive Bayes

- Support Vector Machines

- Decision Trees

- K-Nearest Neighbors or Ensemble Methods

- Regressions

- Outlier Detection and Removal

- Clustering

- Feature Scaling

- Feature Selection

- Text Learning

- Principal Component Analysis

- Validation

- Evaluation Metrics

If you have a Python environment and understand middle school level

math (remember y = mx + b?), this course should be easy

to follow. There are some fancy terminologies involved, but they can

describe otherwise simple logic.

If I am at the beach and most of the people around me are sunburnt, then I am sunburnt as well (regardless of my skin type, where I am standing, and so on).

— Nearest K-Neighbors

There’s a relationship between x and y, and it’s a straight line. (You probably saw me in high school, but if you didn’t take statistics, we didn’t meet.)

— Linear Regression

If someone put all of the words used in (famous book here) into a list then wrote something with a word palette constrained to that list, then I’m going to think they are (author of famous book) themselves regardless of the order, pattern, or rhythm of the words.

— Naive Bayes

I am literally a tree data structure where each node represents a decision at a certain threshold.

— Decision Trees

Course Quality and Differences from Formal Education

If you’re going to drop a few hundred bucks on a certificate from an unaccredited “education platform”, get an idea of how things are, right?

Intro to Machine Learning is assessed to be 3 months long. Since it is self-paced, it’s possible to finish in a shorter span of time. It took me 6 weeks from start to writing this blog. Other than the self-paced nature of self-learning, this course differs from a conventional education in that …

- The instructors are actually prepared to teach in an online environment.

Each video lecture was performed on a digital canvas (hand and pen is a transparent layer). Captions are available in multiple languages for each video.

To contrast, depending on the instructor hired at a real school, remote learning can involve a guy streaming from his phone camera over Zoom in a dimly lit room.

the psychological validation of a green checkmark each time a lesson or quiz was completed.

An instance of ChatGPT (Udacity AI) that is specifically trained on the course material is available to answer questions.

No “homework assignments”— lesson plans involve less drilling or repetition.

You might recall grinding exercises from a textbook. To repetitively solve problems so you memorize the formulas for next morning’s quiz. In Intro to Machine Learning the quizzes are for testing and building intuition. They also are not graded; however, Udacity AI can be asked to generate exercises problems.

Overall, I approve of the course’s design at least for employed students or anyone who needs a flexible schedule. That doesn’t mean I would recommend the style of instruction to anyone that needs repetition or supervision.

As this course is free, we can reasonably expect it to be unmaintained, buggy, crap, (or politely, with lesser effort involved). So here’s what you might have to deal with that hopefully will not be the case for a paid course:

- some materials still use Python 2

- mini-projects that don’t result in the answer expected by the quiz

- the multiple choice questions can bug out: its possible to get marked wrong for the right answer

- grading scripts on

some quizzes will return errors for

joblib(nothing to do with the student code)

When an answer is submitted, the grading script either returns

positive feedback (the answer is correct) or negative. The negative

feedback will hint or indicate the possible error behind the wrong

answer. When the grading script complains about joblib,

the feedback given may always be negative, returning the interpreter

complaint about joblib that students have no control

over.

But if you can bear all this, you get a course led by Sebastian Thrun, the founder of Udacity himself. He has a European accent and uses the wrong words at times, but hey, everyone makes mistakes.

Two of the Lessons are Out of Order

When I took this course, it hadn’t been updated since 2022. From the looks of some timestamps, the materials have been around since 2015.

The two lessons Feature Scaling and Text Learning are out of order. Feature Scaling is introduced before Text Learning; however, the mini-project for Feature Scaling relies on the mini-project for Text Learning to have been completed.

The Feature Scaling mini-project will ask for the vectorizer from the Text Learning mini-project to be executed and modified. The lesson Feature Scaling will also discuss stop words and the TFIDF Vectorizer without introducing them. The two are introduced in Text Learning; therefore, skip to the lesson Text Learning before going through Feature Scaling.

Final Project: Persons of Interest at Enron

https://notabug.org/scuti/ud120-projects/src/final-project

My final project can be run with:

python poi_id.py— exports decision tree model by defaultpython poi_id.py knn— k-nearest neighbors

The final project of the course uses the “Enron data set”. Enron was an American energy trading corporation that became bankrupt at the end of 2001 because of a financial scandal arising from accounting fraud. The goal of the final project is to train a model to predict the people of interest among innocent|irrelevant employees.

The original grading rubric for the final project is no longer available, but ChatGPT appears to have some of the details about it.

Me:

for the final project, is it required to get a true positive on tester.py?

ChatGPT:

In the context of your final project, achieving a true positive on tester.py is important, but it is not strictly required to get a passing score. The purpose of tester.py is to evaluate your model’s performance based on various metrics, including true positives, false positives, true negatives, and false negatives.

Me:

is it okay to get true positives on random_state’s different from 42 on StratifiedShuffleSplit

ChatGPT:

Yes, it is perfectly okay to get true positives when using different values for random_state in StratifiedShuffleSplit. The random_state parameter controls the randomness of the data splitting process, which means that changing its value can lead to different training and testing sets being created each time you run your code.

This blog will double as my documentation of my final project.

Here, I will try to get true positives without changing the

random_state parameter for StratifiedShuffleSplit, a

cross-validator from sklearn.1

The bad guys coordinating financial fraud were probably talking to each other regularly. The average innocent Joes and Janes, not so much.

— my intuition

- feature – a characteristic of a subject used in training and testing a model; in this case, an employee of Enron has features such as salary, bonus, emails sent to people of interest, and so forth.

(rubric: create new features, select features, scale features)

For this project I derived a new feature from:

from_poi_to_this_personfrom_this_person_to_poito_messages(messages received by an employee)from_messages(messages sent by an employee)

… in order to represent the level of correspondence. The exact formula is roughly

ratio_sent_to_poi = from_this_person_to_poi / from_messages

ratio_got_from_poi = to_this_person_from_poi / to_messages

multiplier = 5

((ratio_got_from_poi * multiplier) + ratio_sent_to_poi)/2The factor is assigned arbitrarily after trial and error. It means receiving a response from a person of interest is more important than sending to a person of interest. I assume, for example, lower level employees won’t be talking to big shots all that often.

In addition to the “score” on correspondence, the secondary feature

used was shared_receipt_with_poi. This also relates to

messaging, that is the number of messages also addressed to a person

of interest.

shared_receipt_with_poi is a number anywhere from 0 to

several hundreds while the “score” on correspondence is an average

between two ratios. Therefore, the values are normalized (“feature

scaled”) to numbers between 0 and 1.

(I did not use any automated feature selection. I just came up with something and it ended up working.)

(rubric: data exploration, outlier investigation)

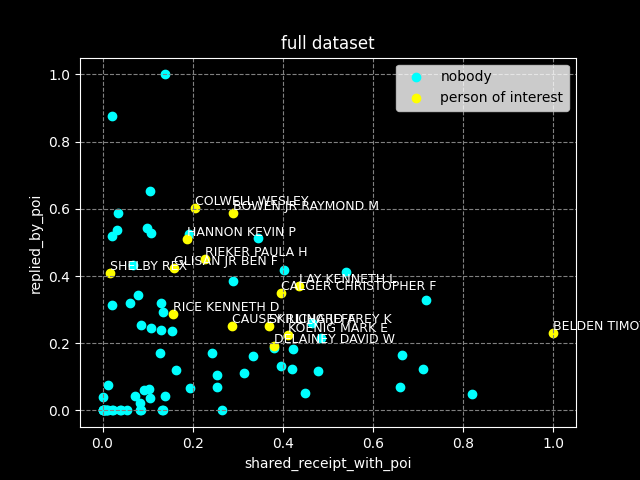

Here are the visualizations my project generates. The first image is of the full data set which helped me tweak the feature I created and decide on my approach. A cluster around (0,0) most likely consists of ordinary employees. Any point near (0,1) might have been executive assistants. Around (0.4, 0.3) and (0.2, 0.5) is where people of interest cluster.

There are also some nearby points that would be potential false positives or the ones who got away, depending on how you look at it. Even though the blue point that nearly overlaps with “HANNON” isn’t a person of interest, if I were an investigator, I would look into him or her. I don’t mind the false positives if the machine learning algorithm was used to quickly determine a list of suspects that would get cleared after an investigation by a human.

I don’t remove “BELDEN” from the data set as an outlier because he appears to have little effect on model performance. Therefore, the only thing removed from the data set was the spreadsheet quirk “TOTAL” (not an actual person, just the totals calculated).

(rubric items: discuss validation)

A classic error in validating a model’s performance is performing a train-test split on sorted data. This would result in a model trained on the pattern within the data set such as values in ascending order. In this case, the data set was sorted alphabetically by last name which was irrelevant enough to the features used.

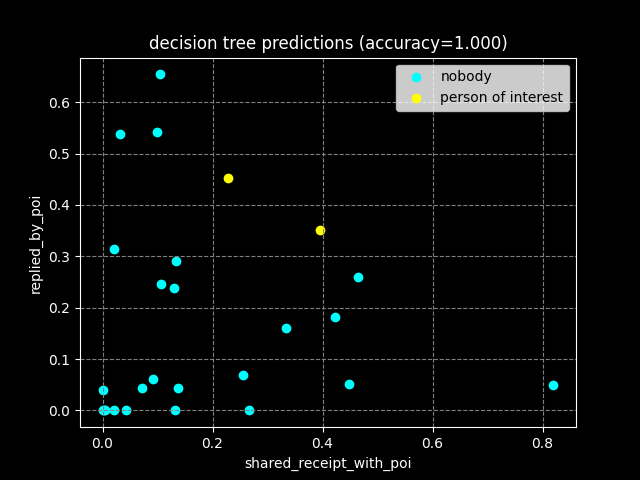

(rubric: pick an algorithm, usage of evaluation metrics, validation strategy)

The algorithm that had the best result was a decision tree. Before, I had tried the k-nearest neighbors algorithm (KNN). Given one testing set (30% of the data withheld from training), an algorithm only needs one true positive to be >90% accurate.

Both algorithms yielded accurate models; however, between the two, KNN was less suspicious than a decision tree. Ultimately, upon cross-validation, KNN was labeling false positives far more than true positives.

Accuracy: 0.78167

Precision: 0.07934

Recall: 0.09100

F1: 0.08477

F2: 0.08840

Total predictions: 9000

True positives: 91

False positives: 1056

False negatives: 909

True negatives: 6944

The decision tree initially was much more suspicious. Even when it was less accurate, labeling more people as POI meant a better chance at precision, recall, f1, f2 scores > 0.

At min_samples_split=6, the decision tree impressively

labeled all entries in the testing set correctly then scored the

following using tester.py (the script used for

grading):

Accuracy: 0.89367

Precision: 0.52034

Recall: 0.55000

F1: 0.53476

F2: 0.54380

Total predictions: 9000

True positives: 550

False positives: 507

False negatives: 450

True negatives: 7493

The model is almost 90% accurate which sounds almost good, but there are roughly as many false positives as true positives.

(rubric: discuss parameter tuning, tune the algorithm)

min_samples_split=6 is the minimum number of samples

at a node required for an algorithm to create a new decision/leaves. I

found values by using a numerical for loop; however, they don’t always

yield better scores upon cross-validation. The ranges on the for loop

were determined by looking at the visualization of the full data set.

n is within the range of the total number of POIs

available in the data set.

showing results of K-nearest neighbors algorithm

n, accuracy, precision, recall

----------------------------------------------

2 0.962 1.000 0.500 <--

3 0.923 0.500 1.000

4 0.885 0.000 0.000

5 0.923 0.500 0.500

6 0.923 0.500 0.500

7 0.923 0.500 0.500

8 0.962 1.000 0.500 <--

9 0.962 1.000 0.500 <--

showing results of decision tree

n, accuracy, precision, recall

----------------------------------------------

2 1.000 1.000 1.000 <--

3 1.000 1.000 1.000 <--

4 1.000 1.000 1.000 <--

5 1.000 1.000 1.000 <--

6 0.923 0.500 0.500

7 1.000 1.000 1.000 <--

8 0.923 0.500 0.500

9 0.923 0.500 0.500

10 0.923 0.500 0.500

11 0.923 0.500 0.500

12 0.962 1.000 0.500

13 0.962 1.000 0.500

14 0.885 0.000 0.000 The final project involves binary classification: the model picks either “person of interest” or “irrelevant nobody”. My model has an F1 score of 0.50 which isn’t amazing. If the data set was balanced, the model is about as good as a coin flip. For the provided data set, people of interest were ~12% of the total number of employees. Therefore, the model is a few times better than a random guess. A way to further improve this model would be to add another dimension, e.g some consideration of salary, bonus, and long term incentive on to a z-axis. That is…

The bad guys coordinating fraud are stinking rich, paying themselves big bonuses, and won’t have long-term incentives because they know the ship is going to sink when the jig is up.

That’s it with the documentation and free response portion of the final project. If the course still had an actual person grading my writing, I’m sure it would have been annoying to grade everything out of order.

Time to Try a Paid Course?

Intro to Machine Learning (UD 120) is a free online course that provides decent video lectures and a data set to work on. However, there is some jank with the quizzes. The course is otherwise well-structured and organized if it weren’t for the reversed order of two lessons.

The programming tasks in the course were straightforward.

scikit-learn (also known as sklearn) has

consistent names for the methods of their classifiers. The starter

code provided to students works without modification with the

exception of tester.py in the final project.2

While I don’t have a strong recommendation for or against the course, I find it preferable to a completely unsupported learning experience (a book and a search engine). For the subject material, having a data set provided and discernment between right and wrong answers helps a lot even though some of the quizzes bug out.